The Next AI Frontier is at the Edge

The year 2024 was pivotal for AI, marked by breakthroughs not just in generative models but also in deploying efficient, small-scale models on edge devices. As AI systems grow in complexity, they face a fundamental challenge: balancing performance with hardware constraints. For example, based on current knowledge and analysis, OpenAI’s GPT-4 is estimated to require over 1,000 petaFLOPS of compute. In comparison, edge devices typically operate at less than 1 TFLOP—sometimes only reaching the range of gigaFLOPS.

Traditional cloud-based AI approaches are increasingly limited by high latency, bandwidth constraints, and privacy risks, creating bottlenecks for real-time applications like autonomous vehicles and healthcare systems. These limitations demand a shift toward Edge AI, which processes data locally on devices, unlocking real-time capabilities while addressing privacy and efficiency concerns.

This article explores the engineering foundations of Edge AI, focusing on hardware optimizations, deployment pipelines, and real-world computer vision applications. By tackling challenges in scalability, efficiency, and security, Edge AI is poised to unlock the next frontier in artificial intelligence.

Introduction to Edge AI

Edge AI involves processing data locally on devices, reducing inference cost, offering faster decision-making, and enhanced security.

The Four Key Advantages of Edge AI:

- Cost-Effective: Reduces recurring expenses by minimizing reliance on cloud infrastructure.

- Efficient: Accelerates decision-making and improves energy efficiency by utilizing local computational power.

- Private & Secure: Processes data locally, reducing exposure to breaches and maintaining user privacy.

- Personalized: Supports adaptive learning and customization without requiring external data transfers.

Cloud vs. Edge Computing vs. Edge AI

- Cloud Computing: Centralized systems process data remotely, requiring high-bandwidth communication with servers.

- Edge Computing: Decentralizes processing by handling data closer to its source, minimizing transmission overhead.

-

Edge AI: Combines edge computing with artificial intelligence, enabling intelligent, localized decision-making on devices like IoT sensors, drones, and smartphones.

- Additional Resources: Edge AI Core Concepts

Why Edge AI is Becoming Crucial

CrowdStrike Global Outage (Source: Link)

Cloud computing has long powered AI systems, but its inherent limitations—latency, bandwidth dependency, and vulnerability to outages—pose critical challenges for real-time, mission-critical applications. These shortcomings are becoming increasingly apparent as systems scale.

Consider the 2024 CrowdStrike outage, which rendered 8.5 million Windows systems unusable with the infamous “Blue Screen of Death.” Major companies, including airlines, financial institutions, and even the London Stock Exchange, faced significant disruptions, collectively incurring an estimated $10 billion in damages. Similarly, a global ChatGPT outage caused widespread service interruptions, underscoring the fragility of cloud-dependent infrastructures.

These incidents highlight the risks of over-reliance on centralized systems for AI deployment. Edge AI mitigates these risks by processing data locally, enabling real-time responses, reducing reliance on network stability, and enhancing data privacy. For use cases like autonomous vehicles or industrial automation, where latency and reliability are non-negotiable, Edge AI provides a robust alternative to traditional cloud solutions.

Key Components of Edge AI

Hardware Requirements

Optimized Hardware Platforms: Edge devices rely on specialized processors like Vision Processing Units (VPUs) and Neural Processing Units (NPUs). These components enable high-performance AI computation within the constraints of low power budgets, accommodating diverse workloads ranging from lightweight inference to compute-intensive vision tasks.

Model Optimization Techniques: Running AI models on resource-constrained devices requires aggressive optimization. Techniques such as quantization (lowering precision without losing accuracy) and pruning (removing unnecessary model layers) are critical. These approaches reduce computational load while maintaining operational performance.

Energy Efficiency: Power constraints are a defining challenge in edge environments. Energy-efficient AI models are vital for extending battery life in devices like wearables and IoT sensors. This is achieved through hardware-software co-design, ensuring that AI models utilize minimal power during inference without compromising performance.

- Additional Resources: Edge-AI Hardware Platforms

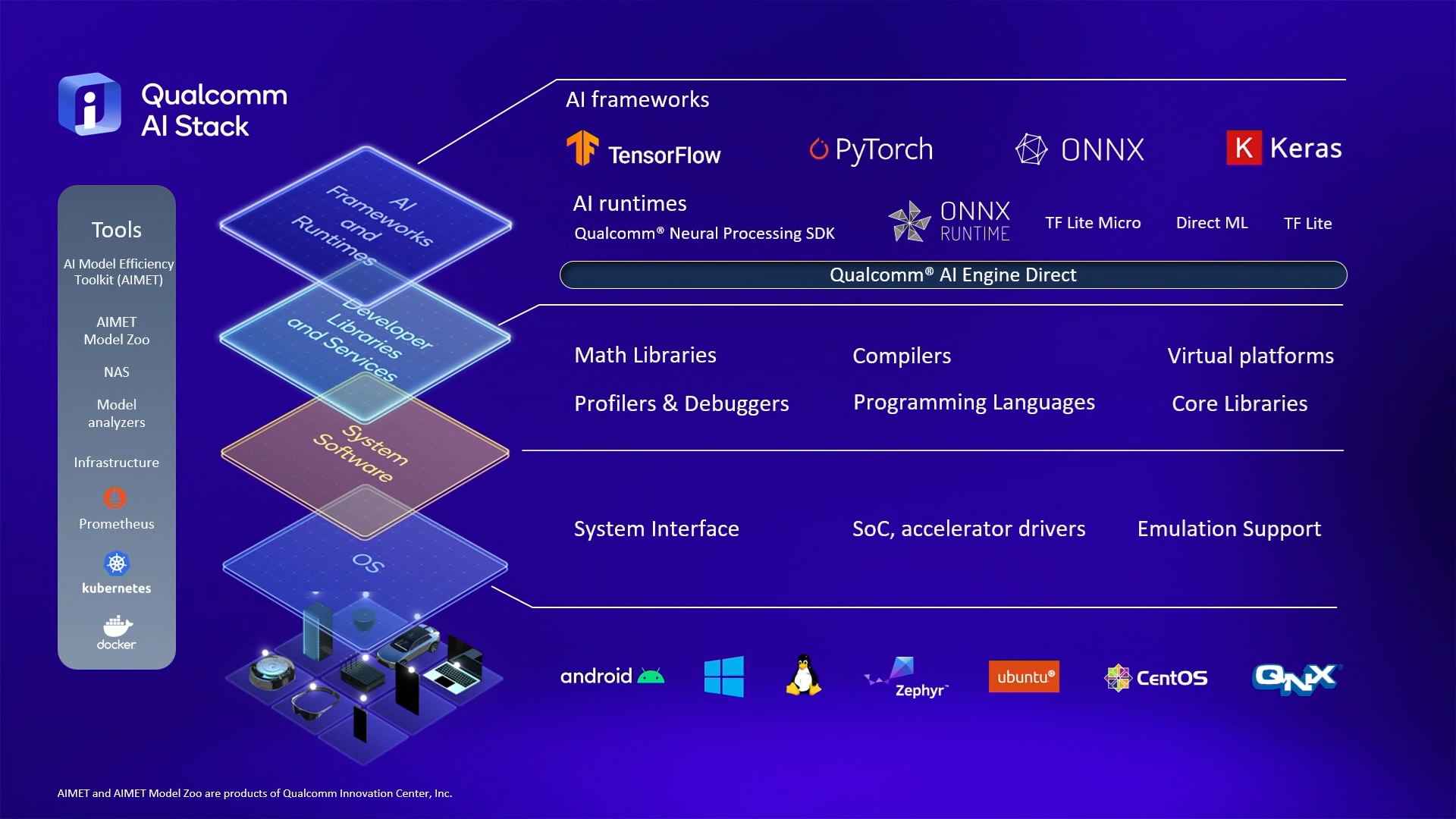

End-to-End Edge AI Stack

The Qualcomm AI Stack. (Source: Link)

The Edge AI stack comprises layers that work together to deliver optimized performance for real-world applications:

| Layer | Components |

|---|---|

| Applications | Object detection, image segmentation, landmark detection, image generation, speech recognition |

| AI Frameworks and Runtimes | TensorFlow, PyTorch, ONNX, Qualcomm Neural Processing SDK, AI Engine Direct, ONNX Runtime, Direct ML, LiteRT |

| Developer Tools | Profilers, debuggers, compilers, math libraries, SoC accelerators, emulation support |

| System Software | SoC and accelerator drivers, system interfaces, virtualization layers |

| Operating Systems | Android, Windows, Linux, Zephyr, Ubuntu, QNX |

| Hardware | CPU, GPU, NPU, sensing hubs |

- Additional Resources: End-to-End Edge AI Deployment Pipeline, Edge AI Frameworks, Edge AI Model Zoo

Applications and Use Cases

Edge AI has become indispensable across industries where real-time decision-making, efficiency, and privacy are critical. Below are key sectors leveraging Edge AI for transformative impact:

Smart Devices and Wearables

Edge AI powers real-time data processing in devices like smartwatches and fitness trackers, enabling precise health monitoring and personalized feedback. By processing data locally, these devices eliminate latency and enhance privacy, making them reliable tools for fitness enthusiasts and medical monitoring alike.

Healthcare Monitoring

Edge AI facilitates continuous, real-time patient monitoring through wearable sensors and connected medical devices. By detecting anomalies early, such as irregular heartbeats or oxygen saturation drops, these systems support timely interventions and improve patient outcomes without requiring cloud dependency.

Industrial IoT and Manufacturing

In manufacturing, Edge AI transforms operational efficiency through predictive maintenance and real-time quality control. Sensors on production equipment analyze data on-site, identifying potential failures before they occur. Meanwhile, edge-based defect detection ensures product quality, reducing waste and operational downtime.

Autonomous Vehicles

Self-driving vehicles demand split-second decision-making. Edge AI processes high volumes of sensor data—such as lidar, radar, and cameras—directly within the vehicle, eliminating the delays of cloud communication. This ensures reliable navigation, collision avoidance, and adaptability in dynamic environments, critical for passenger safety.

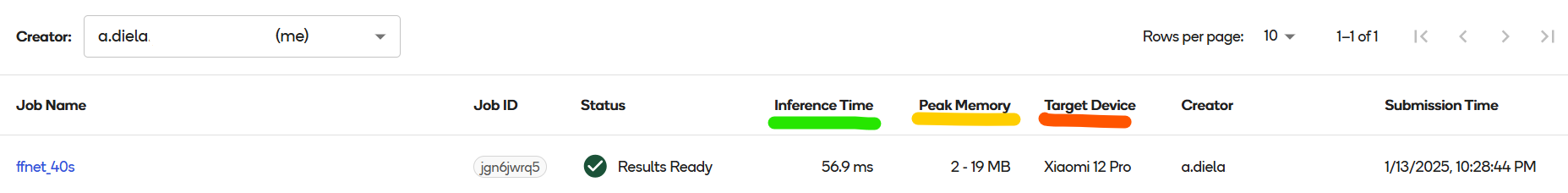

Case Study: Real-Time Segmentation Deployment Using Qualcomm AI Hub

This case study demonstrates how to deploy a semantic segmentation model optimized for edge devices using Qualcomm AI Hub in just four simple steps. The example leverages FFNet, a model tailored for efficient edge-based semantic segmentation, tested on the Cityscapes dataset.

Applications like autonomous driving, augmented reality, and mobile robotics require real-time segmentation capabilities, making this deployment pipeline crucial for performance-critical scenarios.

Deployment Steps

- Configure Qualcomm AI Hub

Begin by setting up your Qualcomm AI Hub environment for deployment:import qai_hub ai_hub_api_token = get_ai_hub_api_token() !qai-hub configure --api_token $ai_hub_api_token - Load and Summarize the Model

Let’s load the pre-trained FFNet model and analyzing its architecture for key metrics:from qai_hub_models.models.ffnet_40s import Model model = Model.from_pretrained() input_shape = (1, 3, 1024, 2048) stats = summary(model, input_size=input_shape, col_names=["num_params", "mult_adds"]) - Evaluate FFNet Variants

We can test high-resolution and low-resolution variants of FFNet to determine the best fit for your device’s constraints:# Example: High-resolution variant from qai_hub_models.models.ffnet_40s import Model # Example: Low-resolution variant # from qai_hub_models.models.ffnet_78s_lowres import Model - Benchmark Performance

We run cloud-based and on-device simulations to benchmark the model:# Cloud-based demo %run -m qai_hub_models.models.ffnet_40s.demo # Simulate edge deployment %run -m qai_hub_models.models.ffnet_40s.export -- --device "Xiaomi 12 Pro" - Optimize and Deploy

Finally, we profile the model for edge execution, optimize it for hardware constraints, and deploy it to the device.# Compile for real device deployment %run -m qai_hub_models.models.ffnet_40s.demo -- --device "Xiaomi 12 Pro" --on-deviceOutcome: Analysis and Interpretation

FFNet_40s profiling job on Qualcomm AI Hub

This step-by-step pipeline demonstrates the trade-offs between performance and efficiency in cloud vs. edge deployments. By optimizing models like FFNet, you can achieve robust, real-time inference capabilities on resource-constrained devices. Below is a summary of the model’s performance on the target platforms for a single inference.

| Target | Inference time (ms) | Peak Memory Usage (MB) | Interpretation |

|---|---|---|---|

| Cloud Server | 0.005* | 1777* | The cloud server provides the baseline performance. |

| Hosted Device | 60.0 | 7 - 22 | The hosted device has higher inference time and Peak Memory Usage compared to the cloud server. |

| Physical Device | 58.6 | 5 - 23 | The physical device demonstrates similar inference time and peak memory usage compared to the hosted device, highlighting a strong correlation between the simulation and the real device. |

Check out the full notebook code: Here

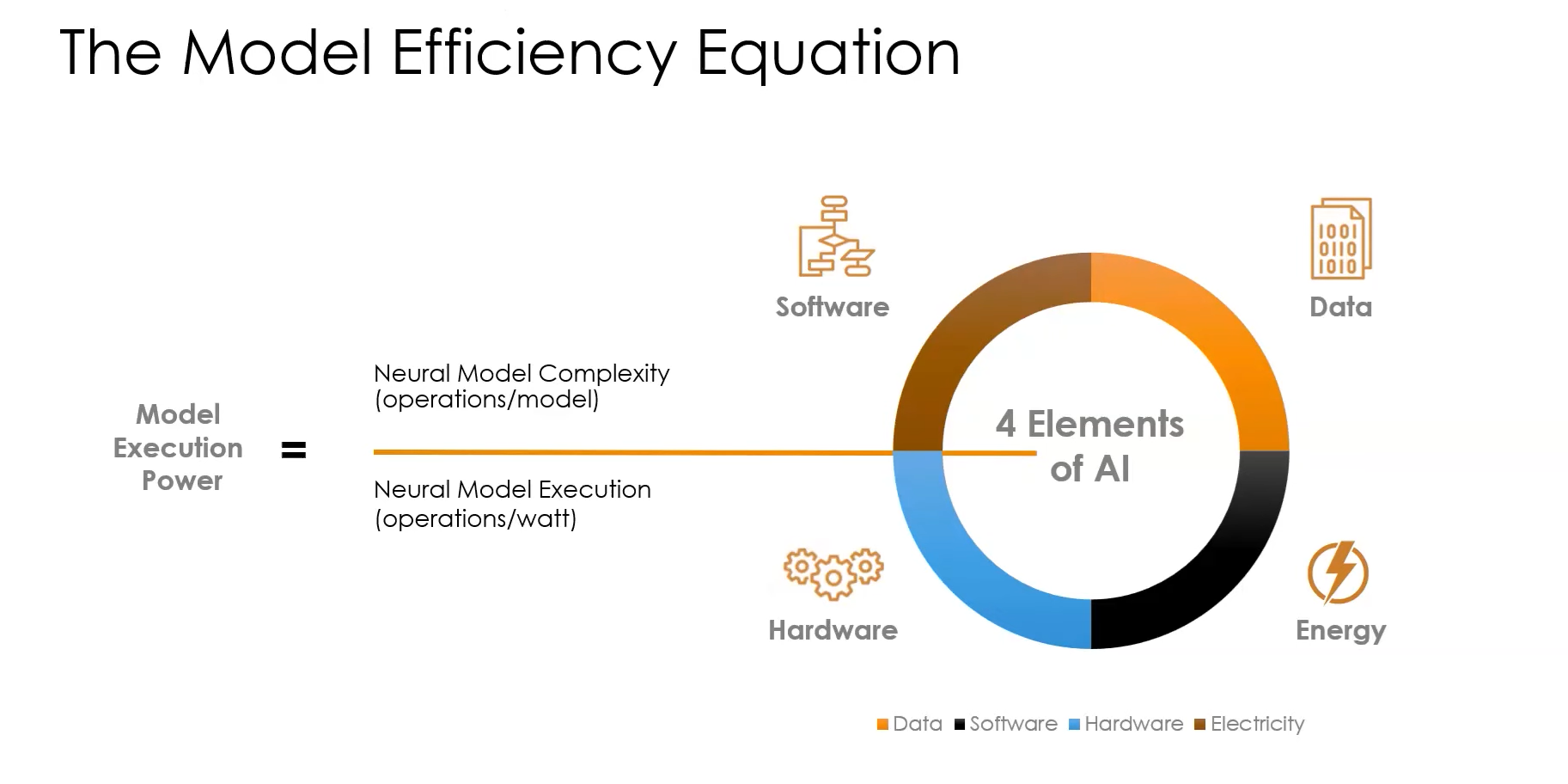

Challenges and Limitations

The model efficiency equation - BrainChip

Computing Power Constraints

Unlike centralized cloud servers with virtually unlimited resources, edge devices operate under tight power and hardware constraints. Running compute-intensive AI models on devices with limited processing power often requires significant trade-offs in latency and throughput.

Model Accuracy vs. Efficiency

Striking a balance between accuracy and efficiency remains a core challenge in edge deployments. High-accuracy models often demand greater computational resources than edge devices can provide. Techniques such as knowledge distillation, pruning, and quantization are essential to deliver models that are both effective and deployable in resource-constrained environments.

Security Concerns

Edge AI’s localized processing enhances privacy by keeping sensitive data on devices, but it also introduces new vulnerabilities. Threats such as firmware attacks, unauthorized access, and malware targeting edge endpoints can compromise the integrity of deployed systems. Robust security measures, including encryption, hardware-based security modules, and regular updates, are critical to safeguarding edge AI deployments.

Future Developments

Edge AI is evolving rapidly, driven by advancements in hardware, software, and deployment paradigms. These developments are shaping the future of real-world AI applications.

Emerging Technologies

BrainChip Akida: An Event-Based Neuromorphic AI Processor

The next generation of Edge AI systems will benefit from co-designed AI models and hardware accelerators, enabling highly efficient end-to-end deployments. Startups and industry leaders alike are exploring novel methods to reduce costs and power consumption, critical for scaling edge solutions.

One promising direction is federated learning, which trains AI models across distributed devices while keeping data localized. This paradigm not only enhances privacy but also minimizes the need for large-scale data transfers.

- Additional Resources: Federated Learning

Industry Trends

Major players are pivoting their strategies to capitalize on the growing importance of Edge AI:

- Intel: Introduced Tiber AI Cloud to bridge cloud-scale development with edge deployment.

- NVIDIA: Launched the NIM platform, offering full-stack optimization for edge AI computation.

- Google: Rebranded TensorFlow Lite as LiteRT, simplifying deployment for mobile, web, and embedded edge applications.

-

Qualcomm: Expanded its AI Hub with over 75 optimized models for on-device applications.

- Further Reading: Edge AI Players & Ecosystems

Conclusion

Edge AI is reshaping how artificial intelligence integrates into our daily lives and industries. By moving computation closer to the source, it overcomes the limitations of cloud computing, enabling real-time, efficient, and secure AI applications.

The journey of Edge AI is just beginning. With advancements in hardware efficiency, federated learning, and robust deployment pipelines, the potential to unlock transformative applications in healthcare, automotive, and IoT is immense. As we refine this technology, Edge AI will stand at the forefront of intelligent systems for years to come.

References

- [1] Machine Learning Systems - Principles and Practices of Engineering Artificially Intelligent Systems, Vijay Janapa Reddi

- [2] GPT-4 Architecture, Infrastructure, Training Dataset, Costs, Vision, MoE - Semianalysis

- [3] AI and compute, 2018 - OpenAI

- [4] What Is Edge Computing? - NVIDIA

- [5] BrainChip Akida Platforms