Portfolio

Explore my cutting-edge computer vision solutions addressing real-world challenges in smart industries such as autonomous systems, healthcare, agriculture, and more.

Computer Vision Real-World Applications

Classification Stack: CNN, ViT

CIFAR-10 Image Classification

Using Convolutional Neural Networks (CNNs) to classify images of different types of objects from the CIFAR-10 dataset.

Object Detection Stack: 2D, 3D

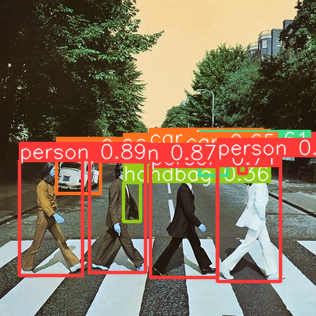

2D Object Detection with YOLOv5

Multimodality (VLMs: GANs, VAEs, Diffusers …)

AI Assisted Image Editing and Manipulation

An AI-assisted tool for creating, editing, and manipulating images.

Multimodal AI Storyteller

Generate text and audio stories from an image.

Motion & Video Analysis: Tracking & Flow

2D Object Tracking with OpenCV and Deep Learning

Perception

2D Object Detection with YOLO for Autonomous Vehicles

Apply the YOLO (You Only Look Once) model series to detect and classify objects such as pedestrians, vehicles, and traffic signs in real time. The project uses real-world camera and LiDAR data from the Lyft 3D Object Detection dataset for autonomous vehicles.

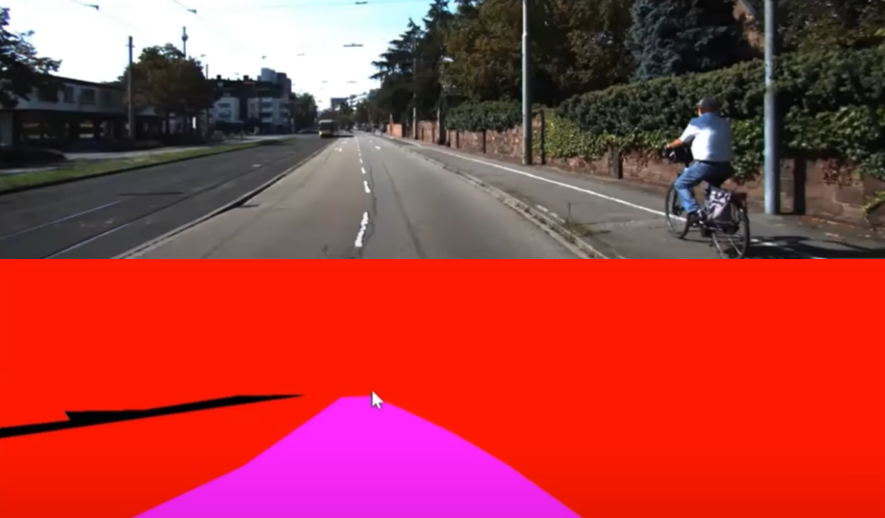

Road Segmentation with Fully Convolutional Networks (FCN)

Implement a Fully Convolutional Network (FCN) model to perform pixel-wise classification, enabling the vehicle to distinguish drivable road areas from obstacles. The model is trained on real-world visual data from the KITTI dataset.

Real-Time Multi-Object Tracking with DeepSORT

Integrate the DeepSORT model to track the trajectories of detected objects across video frames, keeping each object's identity consistent from frame to frame.
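To illustrate what "consistent identification" means in practice, here is a minimal sketch of the data-association step that every tracker needs. It is deliberately not DeepSORT: DeepSORT combines Kalman-filter motion prediction, appearance embeddings, and Hungarian assignment, whereas this sketch uses greedy IoU matching only. The function names, the `min_iou` threshold, and the box values are all hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, next_id, min_iou=0.3):
    """Greedily match detections to tracks by IoU (simplified, not DeepSORT).

    tracks maps track_id -> last known box. Unmatched detections start new
    tracks; unmatched tracks are simply dropped in this sketch.
    Returns (updated tracks, next unused id).
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        reverse=True,
    )
    updated, used_tracks, used_dets = {}, set(), set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if tid in used_tracks or j in used_dets:
            continue
        updated[tid] = detections[j]
        used_tracks.add(tid)
        used_dets.add(j)
    for j, det in enumerate(detections):
        if j not in used_dets:
            updated[next_id] = det
            next_id += 1
    return updated, next_id


# Two frames of made-up detections; the detector returns them in a different order.
frame1 = [(0, 0, 10, 10), (50, 50, 60, 60)]
frame2 = [(52, 51, 62, 61), (1, 0, 11, 10), (100, 100, 110, 110)]
tracks, next_id = associate({}, frame1, next_id=1)
tracks, next_id = associate(tracks, frame2, next_id)
```

After the second frame, the two original objects keep their IDs even though the detection order changed, and the new object receives a fresh ID.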

3D Object Detection with SFA3D and KITTI

Use the SFA3D model to detect objects in 3D space from LiDAR data in the KITTI dataset, which is crucial for understanding the vehicle’s surroundings.

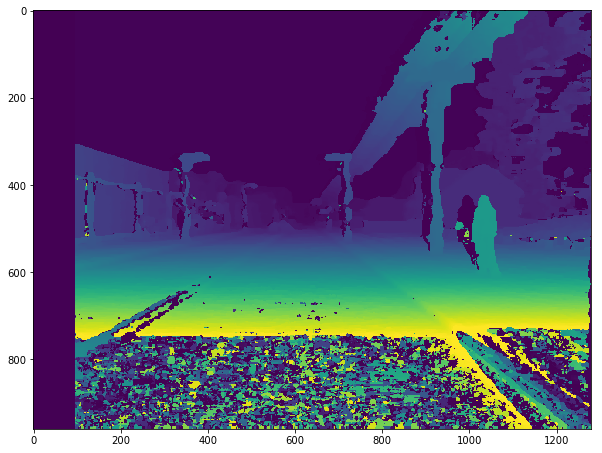

3D Data Visualization and Homogeneous Transformations

Visualize and manipulate 3D point cloud data from LiDAR sensors (KITTI dataset), applying homogeneous transformations to align data from multiple sensors.
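As a rough illustration of the homogeneous-transformation step, the sketch below builds a 4x4 transform and applies it to a few 3D points. It uses plain Python lists for portability; the function names and the extrinsic values (a 90 degree yaw and a 1 m offset) are assumptions made for the example, not KITTI calibration data.

```python
import math


def make_transform(yaw_rad, translation):
    """Build a 4x4 homogeneous transform: rotation about z by yaw, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_points(T, points):
    """Apply T to (x, y, z) points by lifting each one to (x, y, z, 1)."""
    out = []
    for x, y, z in points:
        p = (x, y, z, 1.0)
        out.append(tuple(sum(T[r][k] * p[k] for k in range(4)) for r in range(3)))
    return out


# Hypothetical extrinsics: the LiDAR frame is rotated 90 degrees about z and
# shifted 1 m along x relative to the target sensor frame.
T_target_from_lidar = make_transform(math.pi / 2, (1.0, 0.0, 0.0))
lidar_points = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5)]
aligned = transform_points(T_target_from_lidar, lidar_points)
```

Packing rotation and translation into one matrix means that chaining sensors (for example LiDAR to vehicle to camera) reduces to multiplying the corresponding 4x4 matrices.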

Camera to Bird’s Eye View Projection with UNetXST

Develop a model to transform camera images into a bird’s eye view, aiding in better spatial understanding for navigation.

Multi-Task Learning with Multi-Task Attention Network (MTAN)

Implement a Multi-Task Attention Network (MTAN) on the Cityscapes dataset to perform tasks such as road segmentation and object detection simultaneously, improving computational efficiency.

Multimodal Sensor Fusion with GPS, IMU, and LiDAR for Vehicle Localization

This project involved integrating data from multiple sensors to accurately determine a vehicle’s position and motion on the roadway. The system uses techniques such as Kalman filtering to combine inputs from GPS, IMU, and LiDAR, enhancing the precision of state estimation critical for autonomous driving applications.

Additional Resources: Linear/Nonlinear KF Implementation.
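The predict/update cycle behind Kalman filtering can be sketched in one dimension. This is a minimal scalar linear filter, assuming a position-only state, an odometry-style displacement as the control input, and a GPS-style position measurement. The noise values and readings are made up, and the project's actual filter fuses more sensors over a larger state.

```python
def kf_predict(x, p, u, q):
    """Predict step: shift the estimate by control input u, grow variance by q."""
    return x + u, p + q


def kf_update(x, p, z, r):
    """Update step: blend prediction x (variance p) with measurement z (variance r)."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    x_new = x + k * (z - x)
    p_new = (1.0 - k) * p
    return x_new, p_new


x, p = 0.0, 1.0  # initial position estimate and its variance
# (displacement since last step, position measurement), hypothetical values
steps = [(1.0, 1.2), (1.0, 1.9), (1.0, 3.1)]
for u, z in steps:
    x, p = kf_predict(x, p, u, q=0.1)
    x, p = kf_update(x, p, z, r=0.5)
```

Each update shrinks the variance `p` below the measurement variance `r`, which is the sense in which fusing the two sources is more precise than either alone.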

Depth Perception for Obstacle Detection on the Road

Implemented stereo depth estimation using Python and OpenCV on CARLA simulator images to calculate collision distances in a driving scenario.

Additional Resources: Learn core concepts here.
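For a rectified stereo pair, depth follows from disparity as Z = f * B / d, where f is the focal length in pixels, B the baseline in metres, and d the disparity in pixels. The sketch below applies that relation to a few disparities. The camera parameters and disparity values are hypothetical, and in the project the disparities would come from a stereo matcher rather than a hard-coded list.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Return depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Non-positive disparity means no valid match or a point at infinity.
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera parameters, not taken from the project.
focal_px = 640.0
baseline_m = 0.54
disparities = [64.0, 32.0, 8.0]
depths = [depth_from_disparity(d, focal_px, baseline_m) for d in disparities]
nearest = min(depths)  # distance to the closest point, a proxy for collision distance
```

Because depth is inversely proportional to disparity, small disparity errors matter far more for distant points than for nearby ones.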

Visual Odometry (VO) for Self-Driving Car Location

A visual odometry system that estimates the vehicle’s trajectory from real-time visual data captured by its monocular camera.
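The trajectory in visual odometry is obtained by chaining frame-to-frame motion estimates. The sketch below shows only that accumulation step, simplified to planar poses (x, y, heading). The relative motions are made-up values standing in for what feature matching and pose recovery would produce, and a monocular camera recovers translation only up to scale, so the metric units here are an assumption.

```python
import math


def compose(pose, delta):
    """Compose a global pose (x, y, heading) with a motion given in the vehicle frame."""
    x, y, th = pose
    dx, dy, dth = delta
    return (
        x + math.cos(th) * dx - math.sin(th) * dy,
        y + math.sin(th) * dx + math.cos(th) * dy,
        th + dth,
    )


# Hypothetical frame-to-frame motions: forward, forward while turning left, forward.
relative_motions = [(1.0, 0.0, 0.0), (1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)]
trajectory = [(0.0, 0.0, 0.0)]
for delta in relative_motions:
    trajectory.append(compose(trajectory[-1], delta))
```

Since every pose is built on the previous one, estimation errors accumulate over time, which is why odometry is typically combined with other localization sources.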

Edge AI

Edge AI processes data locally on devices, which reduces inference cost, speeds up decision-making, and enhances security.

- Further Reading: The Next AI Frontier is at the Edge

- Learning Resources: Curated Edge AI Technical Guides

Real-time Segmentation Deployment with Qualcomm AI Hub

This case study demonstrates how to deploy a semantic segmentation model optimized for edge devices using Qualcomm AI Hub. The example leverages FFNet, a model tailored for efficient edge-based semantic segmentation, tested on the Cityscapes dataset.

Industry Applications: Autonomous Driving, Augmented Reality, and Mobile Robotics

Case Studies

Autonomous Systems (Self-Driving Cars/ADAS, Robotics, UAVs)

Self-Driving Car Environment Perception

A foundational self-driving car perception stack that extracts useful information from its surroundings and performs the complex tasks needed to drive safely through the world.

End-to-End Self-Driving Car Behavioral Cloning

End-to-end self-driving car behavioral cloning implementation based on NVIDIA's End-to-End Learning paper, using computer vision, deep learning, and real-time visual data from the Udacity Self-Driving Car simulator.

Smart Cities

⚡ SmartMeterSim: IoT Smart Meter Simulation

⚡ SmartMeterSim is a production-ready IoT solution for real-time energy monitoring and optimization for smart grids, buildings, and Edge-to-Cloud applications.